
I'm a third-year Ph.D. student at Cornell Tech, advised by Prof. Andrew Owens. I also collaborate closely with Prof. Antonio Loquercio and Prof. Nima Fazeli. I received my M.S.E. from the University of Michigan in 2025 and then transferred to Cornell. Before that, I received my B.Eng. and B.Ec. from Shanghai Jiao Tong University in 2023, with an honors degree from Zhiyuan College. During my undergraduate studies, I worked with Prof. Ruohan Gao and Prof. Jiajun Wu at Stanford, and with Prof. Yong-Lu Li and Prof. Cewu Lu at SJTU. I mainly work on multisensory learning and robotic manipulation.

Email · Google Scholar · GitHub · Twitter · WeChat


Humans perceive the world through multiple senses, and from these perceptions we build abstract concepts to understand it. From those concepts we develop the ability to reason logically, which in turn enables remarkable achievements. Inspired by this, my dream is to design human-like multisensory intelligent systems, a goal that breaks down into four specific problems:


(* indicates equal contribution)


Samanta Rodriguez\*, Yiming Dou\*, Miquel Oller, Andrew Owens, Nima Fazeli

CoRL 2025 (Oral) · paper · project page

We learn to translate touch signals captured by one touch sensor into the signals of another, which allows us to transfer object manipulation policies between sensors.


Yiming Dou, Wonseok Oh, Yuqing Luo, Antonio Loquercio, Andrew Owens

CVPR 2025 · paper · project page · code

We make 3D scene reconstruction interactive by predicting the sounds of human hands physically interacting with the scene.


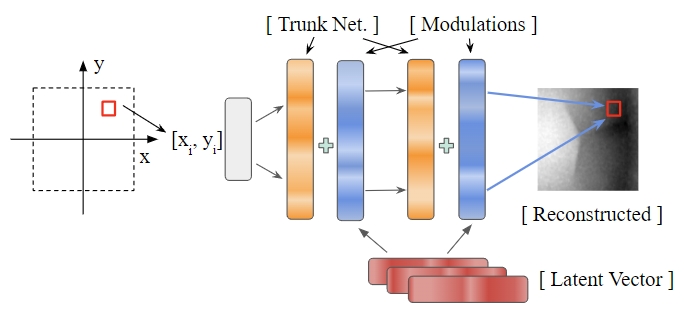

Yiming Dou, Fengyu Yang, Yi Liu, Antonio Loquercio, Andrew Owens

CVPR 2024 · paper · project page · code

We present a visuo-tactile 3D scene representation that can estimate the visual and tactile signals for a given 3D position within the scene.


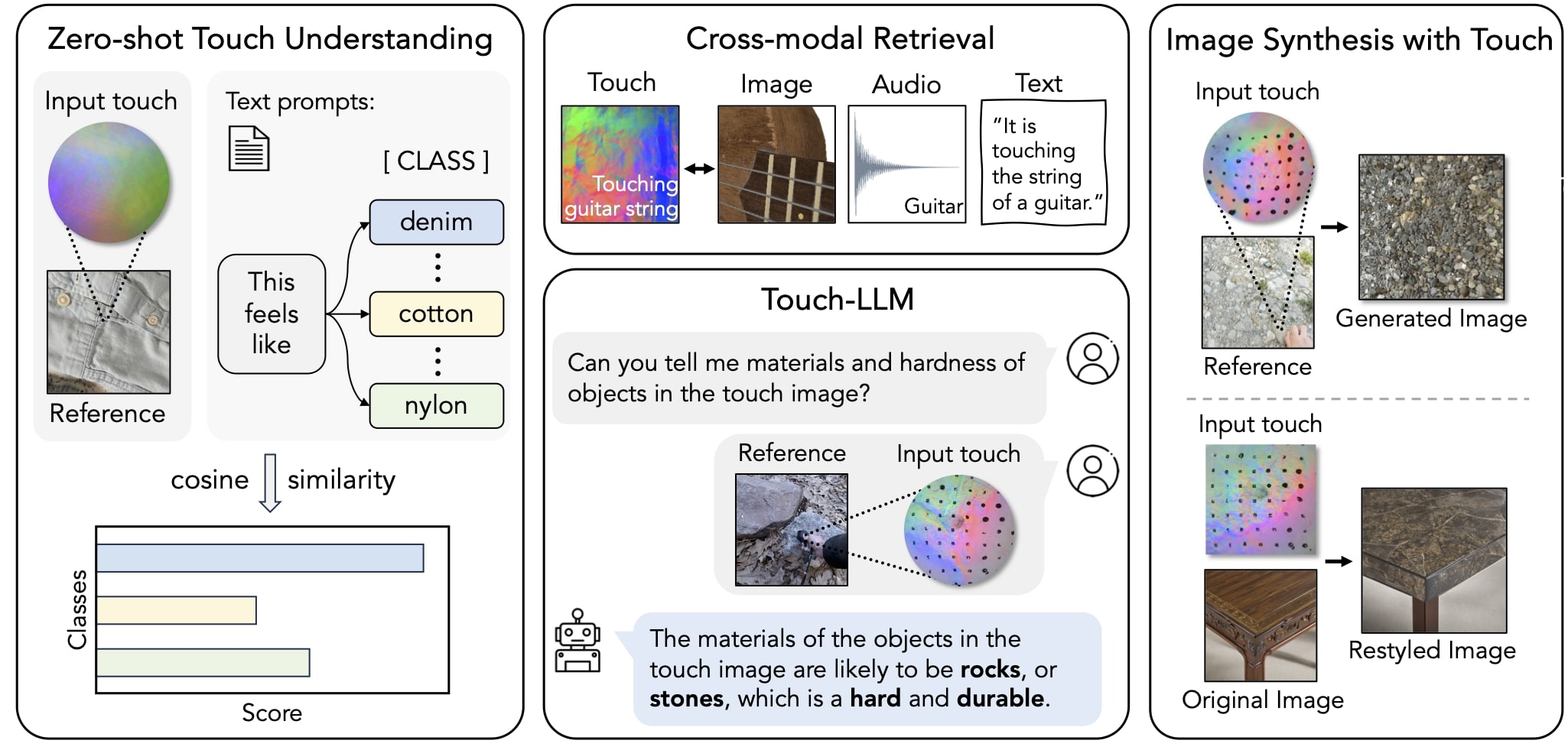

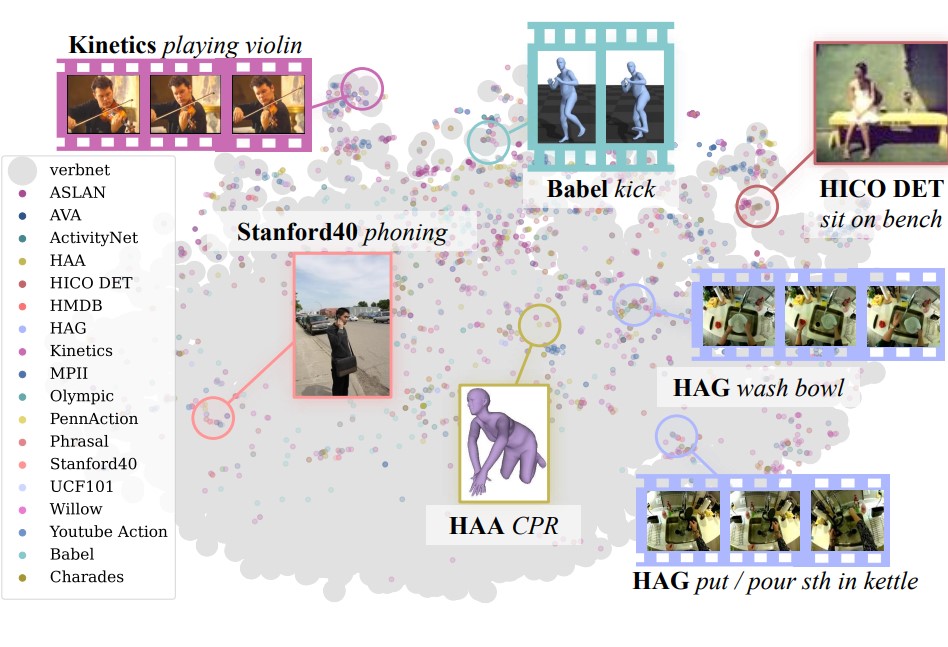

Ruohan Gao\*, Yiming Dou\*, Hao Li\*, Tanmay Agarwal, Jeannette Bohg, Yunzhu Li, Li Fei-Fei, Jiajun Wu

CVPR 2023 · paper · project page · code · interactive demo · video

We introduce a benchmark suite for multisensory object-centric learning with sight, sound, and touch. We also introduce a dataset of multisensory measurements of real-world objects.


2025.08 ~ Present · New York, U.S.

Ph.D. Student in Computer Science · Advisor: Prof. Andrew Owens


2023.08 ~ 2025.08 · Ann Arbor, U.S.

M.S.E. in Computer Science and Engineering · Advisor: Prof. Andrew Owens

Completed the first two years of my Ph.D. before transferring to Cornell.


2022.03 ~ 2023.04 · Stanford, U.S.

Visiting Research Intern · Supervisors: Prof. Ruohan Gao, Prof. Jiajun Wu, and Prof. Fei-Fei Li


2019.09 ~ 2023.06 · Shanghai, China

B.Eng. (Honors) in Computer Science and Technology · B.Ec. (Minor) in Economics

Member of the Zhiyuan Honors Program · Supervisors: Prof. Cewu Lu and Prof. Yong-Lu Li


As someone who builds multisensory systems, I also enjoy being a multisensory embodied agent outside of work: